Kubernetes as a modern platform for hosting enterprise applications

Currently, many companies that use conventional on-premises VM-based or VDI hosting face significant challenges, including:

- A very long release cycle

- Inconsistent reliability and availability

- A high total cost of ownership (TCO) for infrastructure

- Long cycles for creating new services and allocating computing resources for them on demand

- A very long and capital-intensive scaling-up process

- Inefficient usage of computing resources

In search of a new platform that solves these issues, companies turn to cloud providers that offer various services for application hosting. The list of requirements is long. The platform must have a minimal cost of ownership, handle different types of workloads (both traditional and multi-tier applications with a data access layer), and be reliable and scalable. Adapting existing applications to the new platform should be as easy as possible and should not require changes to the source code. Finally, creating new services and deploying existing ones should be fast and take little effort.

Sound like a tall order? In this article, we will introduce you to a platform that meets every one of these requirements. That platform is called Kubernetes.

What is Kubernetes?

Kubernetes is a portable and extensible open-source platform for automating the deployment, scaling, and management of containerized applications, such as applications packaged as Docker containers. Google originally developed Kubernetes and later donated the project to the Cloud Native Computing Foundation (CNCF). Today, many large organizations and startups use Kubernetes as a platform for deploying services. They do so because it offers a number of advantages over other platforms:

- High reliability and availability

- Built-in scalability

- Efficient use of computing resources

- Support for microservice architectures with both stateful and stateless services

- Support for a variety of application deployment strategies, such as canary and blue/green deployments
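One common way to run a canary deployment with plain Kubernetes objects is to use two Deployments, one for the stable version and one for the canary. Both share a label that a single Service selects. Traffic then splits roughly in proportion to the replica counts. The sketch below computes that split. It assumes requests are spread evenly across Pods; the function name and parameters are hypothetical.

```python
import math


def canary_split(total_replicas: int, canary_percent: float) -> tuple[int, int]:
    """Return (stable_replicas, canary_replicas) for a canary rollout.

    Assumes a single Service selects the Pods of both the stable and the
    canary Deployment and spreads requests roughly evenly across them, so
    the canary's share of traffic is about canary_replicas / total_replicas.
    """
    if total_replicas < 1:
        raise ValueError("total_replicas must be at least 1")
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Round up so that any non-zero percentage yields at least one canary Pod.
    canary = math.ceil(total_replicas * canary_percent / 100)
    return total_replicas - canary, canary


# Send roughly 10% of traffic to the new version: 9 stable Pods, 1 canary Pod.
print(canary_split(10, 10))
```

With this approach, the replica count limits how finely you can split traffic. For example, 25% of 10 replicas rounds up to 3 canary Pods. Finer weighting usually requires an ingress controller or service mesh that supports weighted routing.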

The Kubernetes architecture

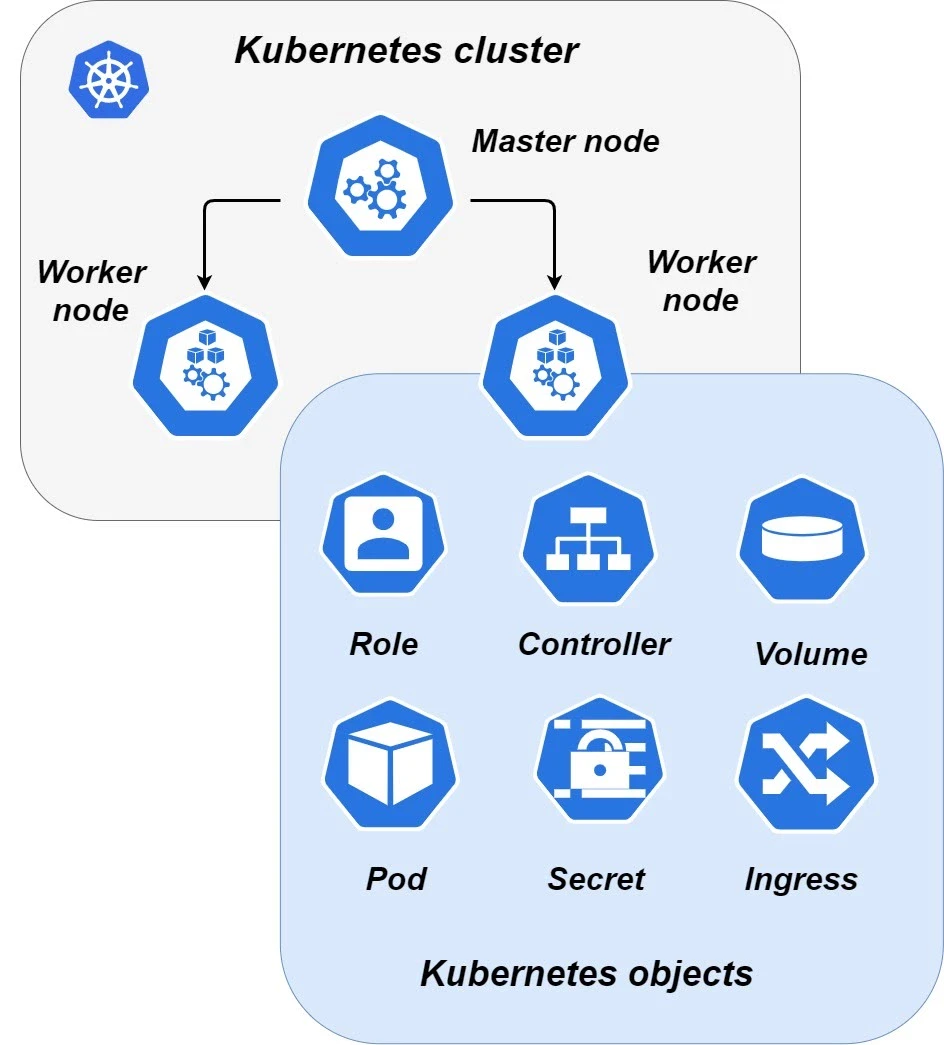

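A Kubernetes cluster consists of a control plane and one or more worker nodes. The control plane was traditionally called the master node, and it can be replicated across several machines for high availability. The worker nodes run the application workloads. The control plane stores and manages the Kubernetes objects, each of which is described declaratively in a template (manifest). The basic execution unit of Kubernetes is the Pod: the smallest deployable unit of an application, made up of one or more containers that share network and storage.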
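Every Kubernetes object is written as a declarative manifest that states the desired result rather than the steps to reach it. The sketch below builds a minimal Pod manifest as a Python dict and prints it as JSON, a format kubectl accepts alongside YAML. The Pod name `web`, the image `nginx:1.25`, and port 80 are illustrative assumptions.

```python
import json


def pod_manifest(name: str, image: str, port: int) -> dict:
    """Return a minimal v1 Pod manifest with a single container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Port the container listens on (informational for the cluster).
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


# Save the output as pod.json and apply it with `kubectl apply -f pod.json`.
print(json.dumps(pod_manifest("web", "nginx:1.25", 80), indent=2))
```

In practice, you rarely create bare Pods like this one. Controllers such as the Deployment create Pods from a template and replace them when they fail.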

Now let’s look at how you can deploy a reliable service with Kubernetes using one of its objects, the Deployment.

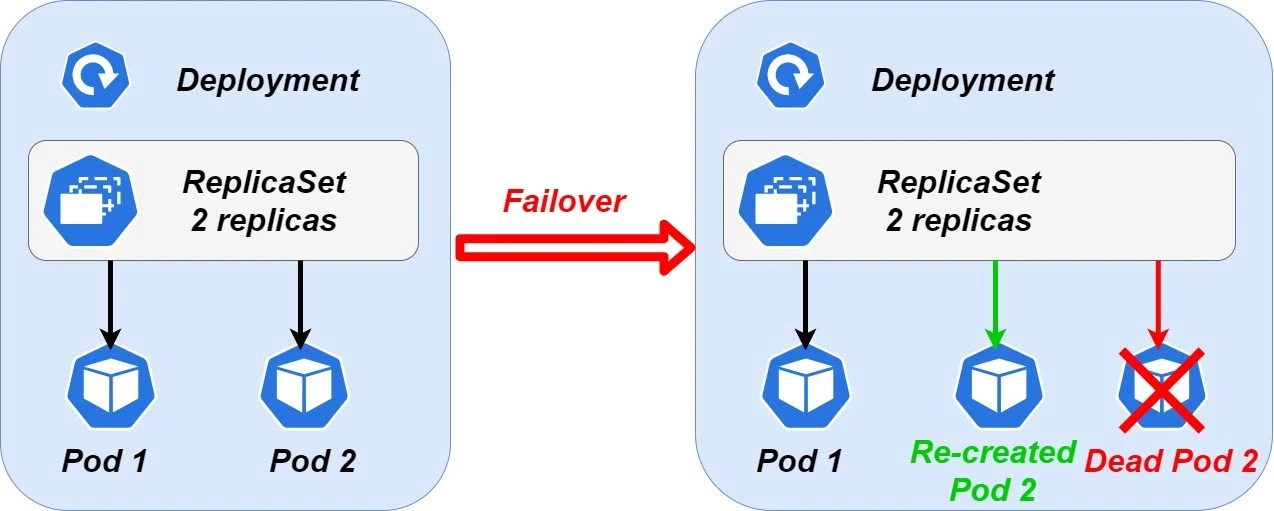

A Controller can create and manage multiple Pods for you, handling replication and rollout and providing self-healing capabilities at cluster scope. For example, if a Node fails, the Controller might automatically replace the Pod by scheduling an identical replacement on a different Node.
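The self-healing behavior comes from a reconciliation loop: the controller repeatedly compares the desired number of Pods with the number actually running and acts to close the gap. The toy simulation below illustrates that idea. It is not how Kubernetes is implemented. The `Cluster` class, the "least-loaded node" placement rule, and the Pod names are all hypothetical simplifications; the real scheduler weighs many more factors.

```python
from dataclasses import dataclass, field
from itertools import count

_pod_ids = count()  # source of unique, hypothetical Pod names


@dataclass
class Cluster:
    """Toy cluster model: node name -> names of the Pods running on it."""
    nodes: dict[str, set[str]] = field(default_factory=dict)

    def running_pods(self) -> set[str]:
        return set().union(*self.nodes.values())

    def fail_node(self, node: str) -> None:
        # A failed node takes the Pods scheduled on it down with it.
        self.nodes.pop(node, None)


def reconcile(cluster: Cluster, desired: int) -> None:
    """One controller pass: compare desired vs. actual Pods and close the gap."""
    while len(cluster.running_pods()) < desired:
        # Place a new Pod on the least-loaded surviving node.
        node = min(cluster.nodes, key=lambda n: len(cluster.nodes[n]))
        cluster.nodes[node].add(f"pod-{next(_pod_ids)}")
    while len(cluster.running_pods()) > desired:
        # Remove a Pod from the most-loaded node.
        node = max(cluster.nodes, key=lambda n: len(cluster.nodes[n]))
        cluster.nodes[node].pop()


cluster = Cluster({"node-a": set(), "node-b": set(), "node-c": set()})
reconcile(cluster, desired=3)   # initial rollout: one Pod per node
cluster.fail_node("node-a")     # simulate a node failure
reconcile(cluster, desired=3)   # the controller schedules a replacement
print(len(cluster.running_pods()), sorted(cluster.nodes))
```

Each pass depends only on the current state, not on what happened before. This is why the same loop handles an initial rollout, a crashed node, and a manual scale-down alike.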

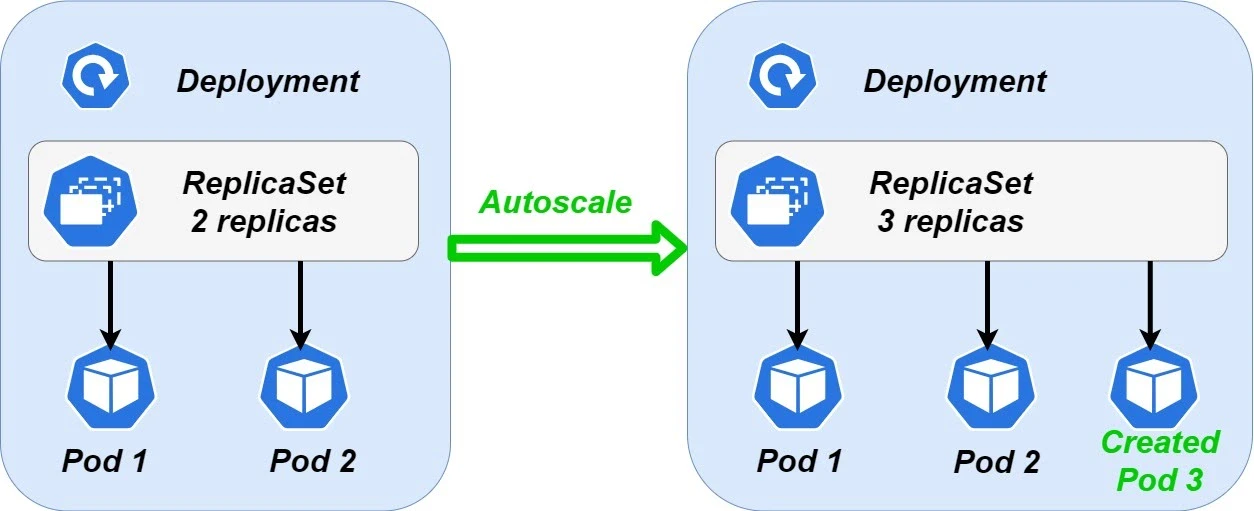

A ReplicaSet controller’s purpose is to keep a stable set of replica Pods running at any given time. As such, it is often used to guarantee the availability of a specified number of identical Pods. A Deployment provides declarative updates for Pods and ReplicaSets.
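In a manifest, this relationship looks as follows. A Deployment declares the desired number of replicas and a Pod template, and Kubernetes creates a ReplicaSet to keep that many Pods running. The sketch below builds a minimal `apps/v1` Deployment with a rolling-update strategy. The name, image, replica count, and `maxSurge`/`maxUnavailable` values are illustrative assumptions, not recommendations.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """Minimal apps/v1 Deployment; its ReplicaSet keeps `replicas` Pods running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            # Replace Pods gradually during an update instead of all at once.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```

Changing the image of an applied Deployment triggers a rolling update. You can do this by editing the manifest or with `kubectl set image deployment/web web=nginx:1.26`. Kubernetes creates a new ReplicaSet and shifts Pods to it step by step.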

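Paired with a HorizontalPodAutoscaler, a Deployment can also scale your service automatically. When resource utilization crosses a configured target, the autoscaler adjusts the Deployment’s replica count, and the underlying ReplicaSet creates or removes Pods to match.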
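In Kubernetes, automatic horizontal scaling is performed by the HorizontalPodAutoscaler. The Kubernetes documentation describes its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. No change is made when the ratio is within a tolerance of 1.0, which is 0.1 by default. The sketch below implements only that rule plus min/max clamping. The real controller also handles not-ready Pods, missing metrics, and stabilization windows. The default bounds of 1 and 10 replicas are assumptions for the example.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HorizontalPodAutoscaler calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0 and clamped to
    [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 Pods averaging 90% CPU against a 60% target -> scale out to 6 Pods.
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))
```

The same formula also scales in. For example, 4 Pods at 20% CPU against a 60% target give ceil(1.33) = 2 Pods.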

The Kubernetes platform itself is quite complex to administer and maintain, so all leading cloud providers offer managed Kubernetes clusters. These providers also offer cloud services that let you build complete PaaS environments for deploying your services and integrating them with your on-premises systems.

How do you prepare your application for a Kubernetes migration?

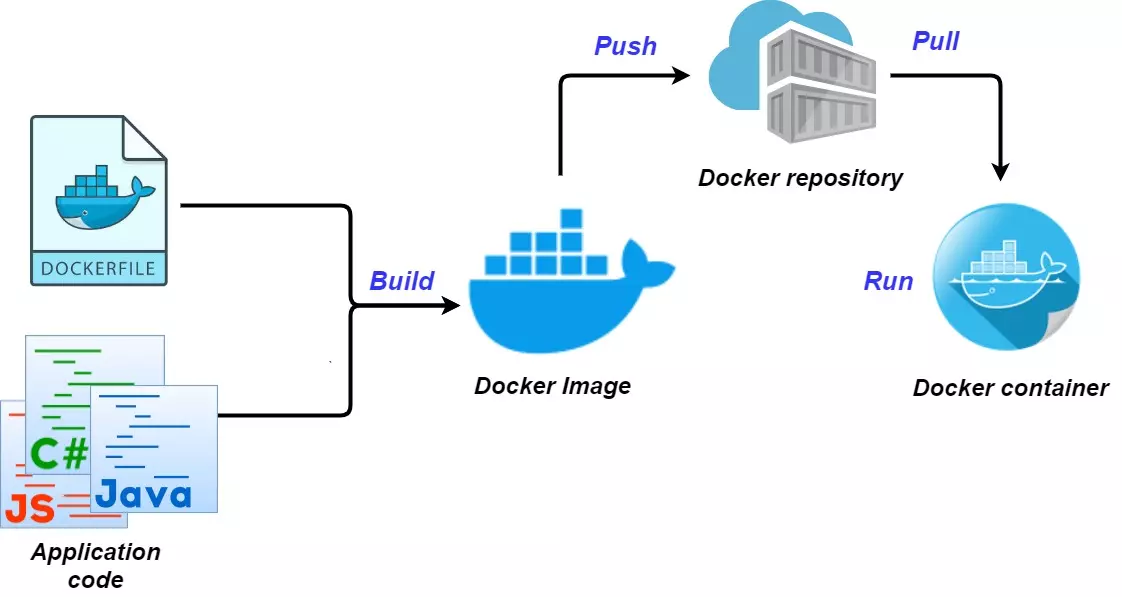

You can migrate applications written in most programming languages and frameworks to Kubernetes via Docker. This makes the Kubernetes journey easier than a move to many other platforms. The process involves containerizing the application with Docker and then creating configuration templates that describe the Kubernetes objects.

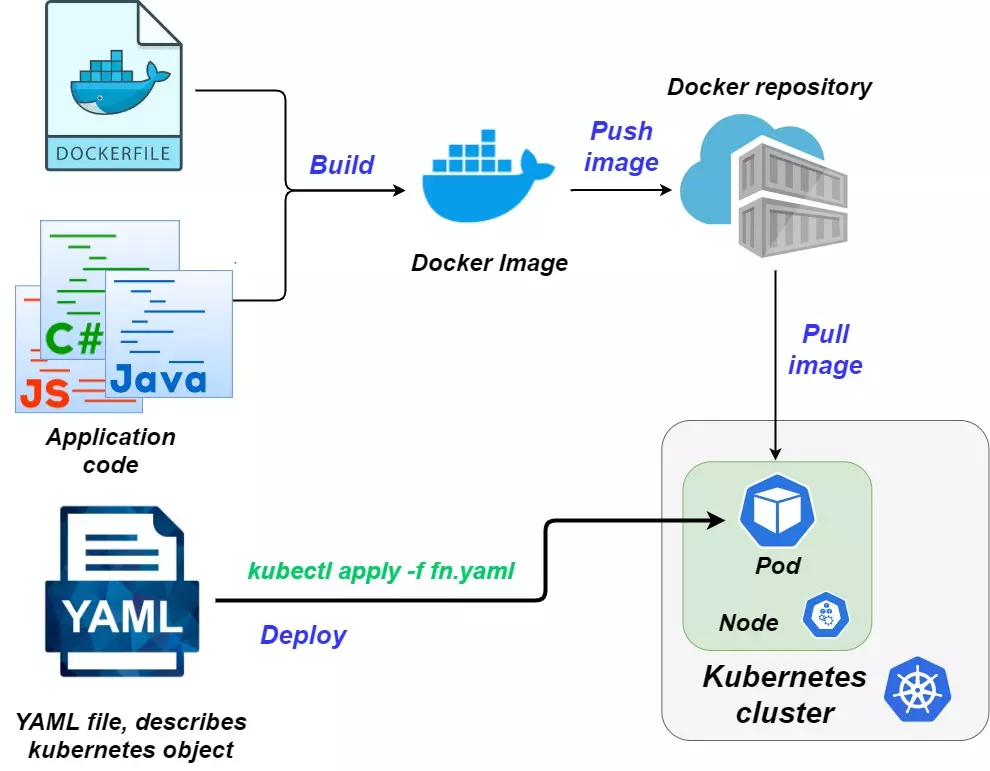

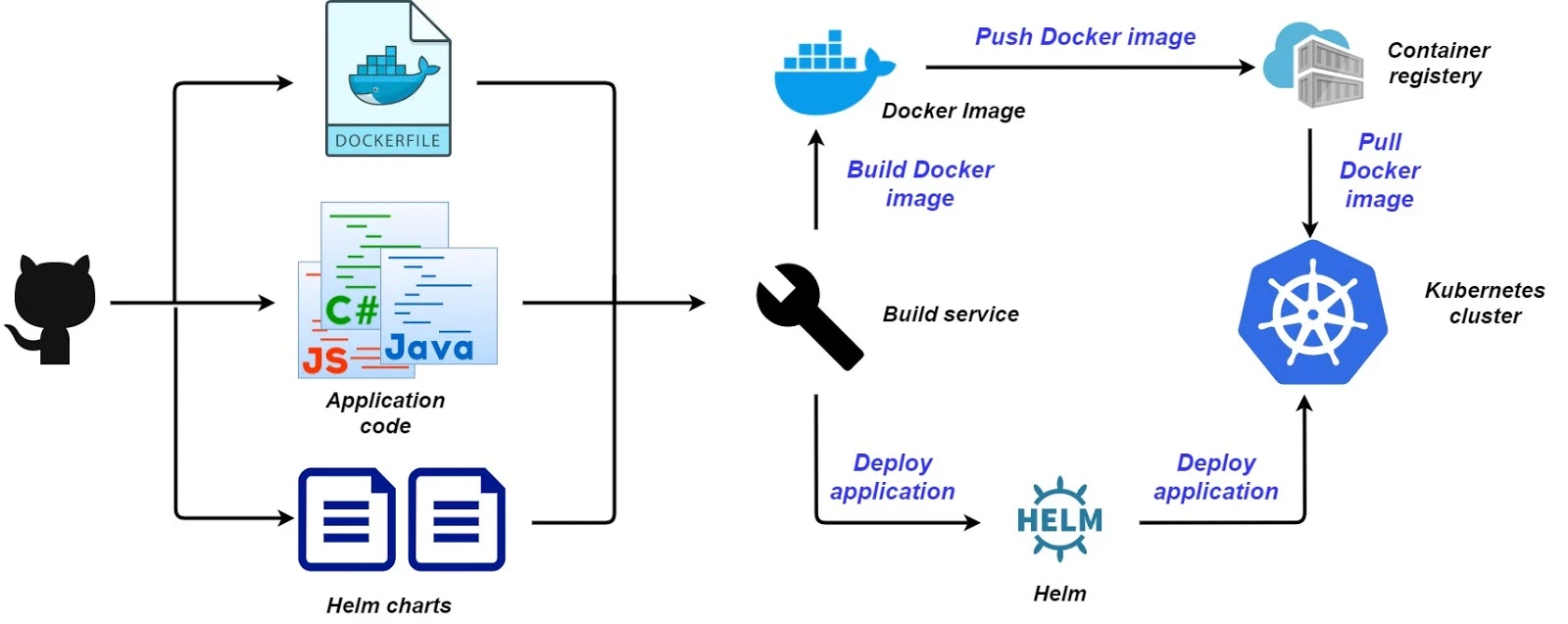

To migrate your application to Docker, you create a Dockerfile: a text file that describes all the dependencies needed to run your application as a Docker container. Next, you create a Docker image build pipeline, which uses your application code and the Dockerfile to build a Docker image. The image is a portable unit, ready to be launched as a container. After the build, the pipeline pushes the image to a container registry (a Docker repository), and each new container pulls it from there.
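The build-and-push steps of such a pipeline boil down to two Docker CLI commands. The sketch below wraps them in a small Python function that a CI job could call. It defaults to a dry run that only returns the commands. The registry path `registry.example.com/shop/web` and the tag are hypothetical. Pushing for real also assumes a prior `docker login` to that registry.

```python
import subprocess


def build_and_push(image: str, tag: str, context: str = ".", dry_run: bool = True) -> list[list[str]]:
    """Build a Docker image from the Dockerfile in `context` and push it to a registry.

    With dry_run=True (the default) the commands are only returned, not executed.
    """
    ref = f"{image}:{tag}"
    commands = [
        ["docker", "build", "-t", ref, context],  # reads <context>/Dockerfile
        ["docker", "push", ref],                  # uploads to the registry named in `image`
    ]
    if not dry_run:
        for cmd in commands:
            subprocess.run(cmd, check=True)  # stop the pipeline on the first failure
    return commands


for cmd in build_and_push("registry.example.com/shop/web", "1.0.0"):
    print(" ".join(cmd))
```

Tagging each image with an immutable version, such as a release number or commit hash, rather than reusing `latest` makes it clear which build a given Pod is running.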

To run a container on a Kubernetes cluster, where it runs inside a Pod, you add Kubernetes deployment templates to your application’s code repository. These templates describe the Kubernetes objects your application needs, including Pods, Secrets, ConfigMaps, Deployments, Ingresses, and others.
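Configuration and secrets are typically kept out of the image and supplied as separate objects: a ConfigMap for plain settings and a Secret for sensitive values. The sketch below generates both and wraps them in a `v1` `List`, which kubectl can apply in one go. The app name, keys, and values are hypothetical. Note that values in a Secret's `data` field are only base64-encoded, not encrypted.

```python
import base64
import json


def config_objects(app: str, settings: dict[str, str], secrets: dict[str, str]) -> dict:
    """Return a v1 List holding a ConfigMap and an Opaque Secret for `app`."""
    config_map = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": f"{app}-config"},
        "data": settings,  # plain, non-sensitive key/value settings
    }
    secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": f"{app}-secret"},
        "type": "Opaque",
        # Values under "data" must be base64-encoded; this is encoding, not encryption.
        "data": {k: base64.b64encode(v.encode()).decode() for k, v in secrets.items()},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [config_map, secret]}


print(json.dumps(config_objects("web", {"LOG_LEVEL": "info"}, {"DB_PASSWORD": "change-me"}), indent=2))
```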

Real services deployed on Kubernetes can consist of many Kubernetes objects, and real applications can consist of many services. To deploy them to a cluster and manage multiple objects as a single entity, you can use Helm (https://helm.sh/), a dedicated package manager for Kubernetes. With Helm, you write chart templates (parameterized Kubernetes manifests) instead of plain Kubernetes YAML files.
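The core idea of a Helm chart is that all of an application's manifests are templates rendered from one shared set of values and installed together as a single release. Helm itself uses Go template syntax, such as `{{ .Values.replicaCount }}`. The sketch below only mimics that idea with Python's `string.Template` and is not Helm's engine. The two templates are hypothetical and trimmed to a few fields, so they are not complete manifests.

```python
from string import Template

# Hypothetical two-file "chart", trimmed to a few fields (not complete manifests).
TEMPLATES = {
    "deployment.yaml": Template(
        "apiVersion: apps/v1\n"
        "kind: Deployment\n"
        "metadata:\n"
        "  name: $release-web\n"
        "spec:\n"
        "  replicas: $replicaCount\n"
    ),
    "service.yaml": Template(
        "apiVersion: v1\n"
        "kind: Service\n"
        "metadata:\n"
        "  name: $release-web\n"
    ),
}


def render_release(release: str, values: dict) -> dict[str, str]:
    """Render every template with one shared set of values, as a single release."""
    context = {"release": release, **values}
    # substitute() raises KeyError if a template uses a value that was not supplied.
    return {name: tpl.substitute(context) for name, tpl in TEMPLATES.items()}


for name, manifest in render_release("shop", {"replicaCount": 3}).items():
    print(f"# {name}\n{manifest}")
```

With a real chart, the equivalent step would be a command such as `helm install shop ./chart --set replicaCount=3`. Helm then tracks all rendered objects as the release `shop`, so they can be upgraded or rolled back together.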

To take full advantage of Kubernetes, your application should follow the principles of the twelve-factor app (https://12factor.net/). However, Kubernetes also supports hosting traditional N-tier applications, as well as fully stateful applications such as relational databases and message brokers.
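One twelve-factor principle that matters directly on Kubernetes is factor III, "Config": store configuration in the environment rather than in code. The same image can then run unchanged in every environment. The sketch below loads settings from environment variables. The variable names `DATABASE_URL`, `LOG_LEVEL`, and `PORT` and their defaults are hypothetical.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Settings:
    database_url: str
    log_level: str
    port: int


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read configuration from environment variables (twelve-factor factor III, "Config")."""
    env = os.environ if env is None else env
    return Settings(
        database_url=env["DATABASE_URL"],          # required: fail fast if missing
        log_level=env.get("LOG_LEVEL", "info"),    # optional, with a default
        port=int(env.get("PORT", "8080")),
    )
```

On Kubernetes, these variables would typically be set in the Pod spec. They can be given directly under `env` or loaded from a ConfigMap or Secret via `envFrom`.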

How your business can benefit from Kubernetes

Using Kubernetes in the cloud gives your business a number of advantages, ranging from making the most of your cloud computing resources to faster and more convenient releases. Here’s a breakdown of the benefits:

- By using cloud services, you can significantly reduce your TCO by automating both the deployment process and infrastructure scaling. In cloud environments, computing resources are created and destroyed on demand, and you pay only for actual usage. You no longer need to invest in physical infrastructure (capital expenditure, CapEx) or pay for its maintenance. Instead, you pay only for the cloud services you use, as operational expenditure (OpEx). Most administration tasks can be automated and performed with little or no involvement from the SysOps team.

- Using Docker containers as the deployment unit lets you make the most of cloud computing resources. For instance, CPU and memory utilization of worker nodes can be raised to as much as 80-90%. More services can then run on the same worker node VM, or the same set of services can run on smaller (and therefore cheaper) worker node VMs.
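The utilization gain comes from packing several containers onto one VM according to their resource requests, instead of dedicating a VM to each service. The rough calculation below shows how many identical Pods fit on one node and what share of the node they reserve. The node size and Pod requests are hypothetical. Requests are reservations, not measured usage. The real scheduler also accounts for system daemons and placement rules.

```python
def pack_node(node_cpu_m: int, node_mem_mi: int, pod_cpu_m: int, pod_mem_mi: int) -> tuple[int, float, float]:
    """How many identical Pods fit on one node, judged by resource requests.

    Returns (pod_count, cpu_fraction_requested, memory_fraction_requested).
    CPU is in millicores, memory in MiB.
    """
    # The scarcer resource limits how many Pods can be placed.
    pods = min(node_cpu_m // pod_cpu_m, node_mem_mi // pod_mem_mi)
    return pods, pods * pod_cpu_m / node_cpu_m, pods * pod_mem_mi / node_mem_mi


# Hypothetical node with 3800m CPU / 14000 MiB allocatable; Pods request 500m / 1024 MiB.
pods, cpu, mem = pack_node(3800, 14000, 500, 1024)
print(f"{pods} Pods, {cpu:.0%} CPU and {mem:.0%} memory requested")
```

In this example, CPU is the limiting resource: about 92% of the node's CPU is reserved while only about half of its memory is used. Mixing CPU-heavy and memory-heavy services on the same nodes is what pushes both numbers up.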

- The availability of services deployed on the cluster increases thanks to Kubernetes’ built-in automatic scaling and self-healing mechanisms, even if some of the worker nodes fail.

- You can make releases quickly and conveniently using Kubernetes’ application deployment technology. Each Kubernetes object is described in a standard declarative notation, and any solution on Kubernetes is built from the same kinds of objects, so creating new services takes little time. Following the principle that entities should not be multiplied without necessity, you reuse existing Kubernetes objects as building blocks to create even very complex platforms.

If you feel that cloud cost reduction can have a significant impact on your business, and you need assistance to make it happen, don’t hesitate to contact us at contact@fastdev.se and we’ll be happy to help.